
With ChatGPT being described as a "viral mega-hit", we asked Datacom's Emerging Technology Practice Lead Dr Hazel Bradshaw about the transformational opportunities and potential pitfalls of the AI chatbot.

There is the opportunity to ‘democratise the written word’. ChatGPT can generate coherent text from simple ‘prompts’ and so has the potential to reduce barriers to written communication, especially when it is combined with voice input. For some people this will overcome their current barriers and make it easier to express themselves in writing. One example of this that we are all familiar with is trying to write the perfect resume or cover letter. ChatGPT can help people present their skills and knowledge in a professional style that may not come naturally to them.

In the short term, we’ll likely see it being used in a professional capacity for productivity and efficiency gains. As humans we do a lot of communicating, and a lot of that is in written language. But writing comes with a high cognitive load. It takes time and energy to turn internal thoughts into coherent, readable external text. Having a ‘quasi-intelligent’ text generation tool can really help speed things up.

The second area will be “search and curation”. Having an AI assistant run your search queries for you and return a written summary of what it found will make a big difference, although there are many pitfalls that need to be worked out to ensure we don’t get caught in echo chambers of false, biased or hateful content.

In the near future we’ll see other elements coming in, such as improved customer service applications, enhanced language translation, and more advanced digital assistants like Alexa, Siri or Google Assistant. One really important opportunity will be around improved assistive technologies for those with disabilities.

That people think ChatGPT is smarter than it is. It is intelligent in a very particular way, and that is creating “human acceptable” text. Language is so key to humans sharing their experiences and learning concepts that there is a real risk people will fall into the trap of thinking ChatGPT is thinking. It’s not. It is returning the most probable response based on a huge body of text data it’s been trained on.

It is not reasoning in the same way a human is reasoning when we come up with an answer. So the responses it generates can easily be incorrect, biased or plain fictional. Our risk is that we don’t apply our critical thinking skills and that we take what it returns at face value. This is why supplying well-formulated ‘prompts’ is critical to generating effective text outputs.
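The idea of “returning the most probable response” can be illustrated with a deliberately tiny sketch. This is not how ChatGPT is built: it is a toy word-pair (bigram) counter, and the function names `train_bigram_model`, `most_probable_next` and `generate`, along with the sample sentence, are made up for illustration. It only shows the general principle of picking the statistically likeliest next word from training text, with no reasoning involved.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def most_probable_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, start, max_words=5):
    """Build a phrase by repeatedly appending the most probable next word."""
    words = [start]
    for _ in range(max_words - 1):
        next_word = most_probable_next(model, words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)


# Hypothetical training text, chosen only to keep the example small.
corpus = "the cat sat on the mat the cat ate the fish the cat sat down"
model = train_bigram_model(corpus)
print(generate(model, "the", max_words=3))
```

The output reads fluently only because “cat” most often follows “the” in the sample text, and “sat” most often follows “cat”. The program has no notion of whether the sentence is true, which is why critical reading of generated text still matters.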

There is also the larger risk of perpetuating biased viewpoints that exist in human-generated text content. Our biases, including sexism, racism and colonialism, are embedded in our text output. Training large language models like OpenAI’s GPT or Google’s LaMDA on uncorrected or poorly moderated data sources only reinforces those biases. I’m yet to see evidence in the market that the industry is building in data correction to mitigate this.

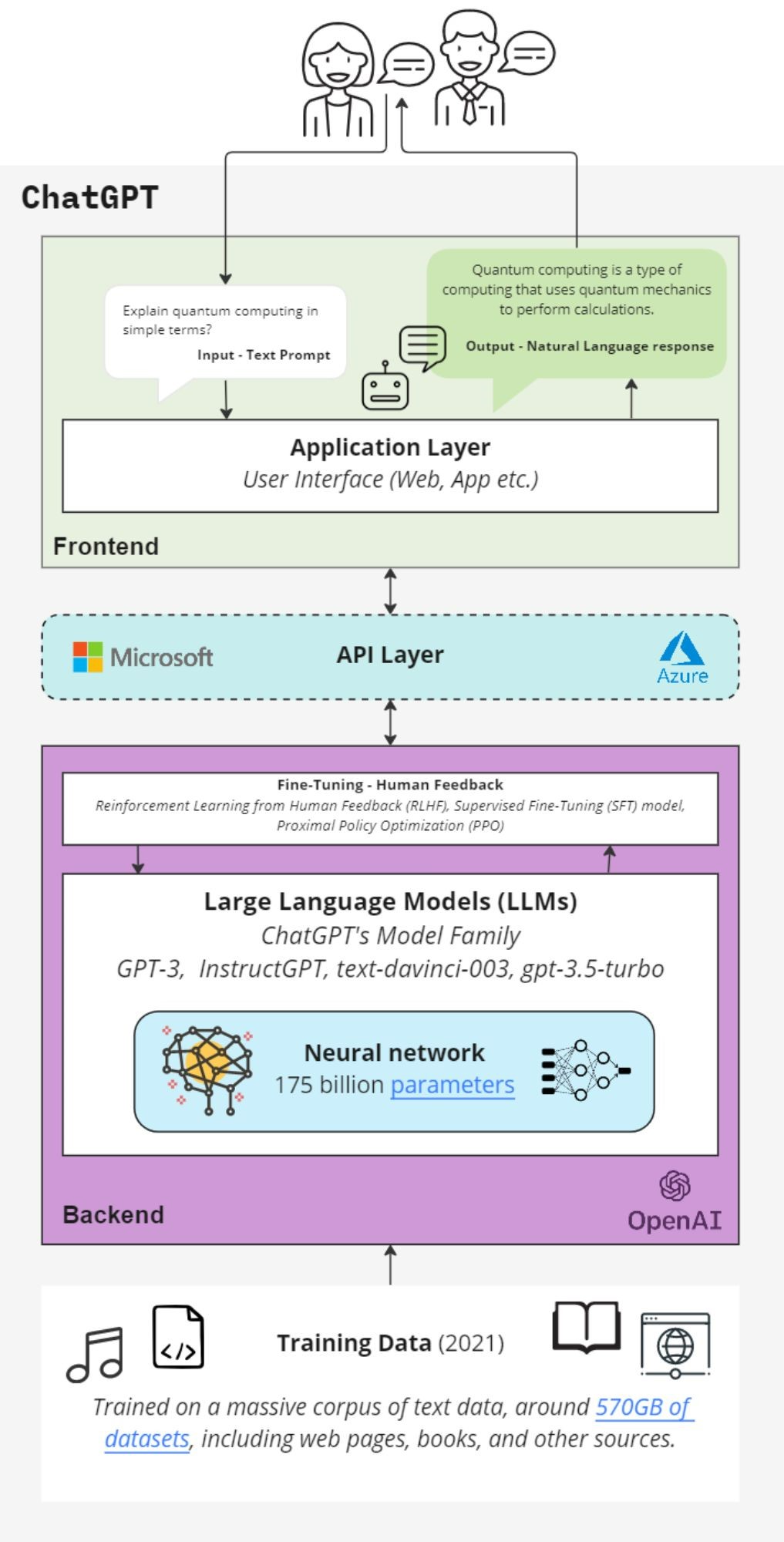

The training data for the current release was limited to data ingested up to 2021, so it is limited in what it knows after that point until the training data set has been updated. Companies will be able to add their own data to get more results via API access. This data will be used to inform the models, and the original data is only held for 30 days to ensure data privacy.

The current release of ChatGPT from OpenAI allows for fine-tuning of conversational responses through human feedback. This feedback activity helps ChatGPT generate more humanlike text or code responses. When we, as users, provide feedback, we are not adding to the underlying data that creates ChatGPT’s neural network (brain).

A few of my key observations so far:

We’ve been experiencing the application of ‘Artificial Narrow Intelligence’ (ANI) or narrow AI for some time. Narrow or weak AI performs a specific task or tasks and it has no capacity for self-awareness or independent thought. ChatGPT is another version of narrow AI, one that is focused on the task of answering questions and engaging in conversations. It just happens to be very convincing at it.

Other examples of narrow AI we will all be familiar with include speech recognition software, image recognition systems, and recommendation algorithms.

Here are a few examples of AI we all experience in our lives but might not think of as AI:

That AI can think independently and has independent intelligence. We are all used to seeing the sci-fi plotline that AI will become sentient and take over. This won’t happen. AI relies on us to learn; we are a model for its development. It wouldn’t know how or why it would separate itself from us. It will be part of our mutually beneficial ecosystem. Symbiotic, like most life on this planet.

This could be an endless list! AI can generally do mundane, repetitive tasks at lightning speed compared to a person. The reason we like ChatGPT so much is that it can write something useful 100x faster than we ever could. Removing some of the cognitive load on people with busy work and family lives is a massive benefit.

There are the obvious fields that this technology can be applied to, such as agriculture, finance and transportation, but these applications are often very functional and once removed from people. For real improvement to people’s lives, I think having AI-augmented wellbeing is something we will embrace.

Loneliness is a universal human condition. As social creatures, we all crave connection but, sadly, with the world's population rapidly ageing, loneliness is becoming an epidemic. AI offers hope of an answer – by combining AI with robotics and language translation, we can harness the power of technology to tackle this issue head-on. AI has the potential to revolutionise how we connect with each other and provide personalised support, no matter where we are in the world.

Dr. Hazel Bradshaw is a specialist in futures thinking, design insight, and digital transformation strategies. In her role as the Emerging Technology Practice Lead for Datacom, Australasia's largest homegrown tech company, Hazel concentrates on identifying growth areas for technology adoption. Her aim is to create new business opportunities for customers while minimising the implementation risk.